Context Cascade and the Attention Economy: Why Smarter AI Still Needs Scaffolding

The attention economy applies to AI the same way it applies to humans. Context windows are finite. Progressive disclosure isn’t a nice-to-have - it’s the answer to a fundamental constraint.

Here’s a bet I’m willing to make about the next generation of AI models.

If Claude 6 ships with the same context window but dramatically improved intelligence, my architecture becomes more valuable. If Claude 6 ships with a 6-million-token context window and attends to every token in it equally well, I’m wrong about everything.

I don’t think I’m wrong.

The Attention Economy Applies to AI Too

We talk about the attention economy for humans. Information overload. Notification fatigue. The constant battle for eyeballs. Your attention is finite, so systems compete for it.

The same constraint applies to AI. Just with different terminology.

Context windows are attention budgets. GPT-4 Turbo gets ~128k tokens. Claude gets 200k. That sounds enormous until you realize what production AI work actually requires.

Load a codebase. Add documentation. Include conversation history. Inject rules and constraints. Provide examples. Reference prior decisions.

Your 200k tokens evaporate fast.
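To make the arithmetic concrete, here is a rough tally. The component sizes are made-up placeholders, not measurements; only the 200k window comes from the figures above.

```python
# Hypothetical token counts for one production session (illustrative only).
CONTEXT_WINDOW = 200_000  # the window size quoted above

SESSION_LOAD = {
    "codebase excerpt": 90_000,
    "documentation": 40_000,
    "conversation history": 35_000,
    "rules and constraints": 10_000,
    "examples": 15_000,
    "prior decisions": 20_000,
}


def remaining_budget(load: dict, window: int = CONTEXT_WINDOW) -> int:
    """Return the tokens left after loading everything (negative means overflow)."""
    return window - sum(load.values())


print(f"tokens left for the actual task: {remaining_budget(SESSION_LOAD):,}")
```

With these placeholder sizes the window is overdrawn by 10,000 tokens before the model has been asked to do anything.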

I’ve written about this before. The naive approach - dump everything into context upfront - doesn’t scale. But I didn’t explain why the constraint exists or why it won’t disappear with smarter models.

Now I will.

Why Smarter Models Don’t Solve This

There’s a persistent belief in AI circles that model improvements will eventually make context management irrelevant. Just wait for GPT-5. Wait for better attention mechanisms. Wait for infinite context.

This misunderstands the problem.

The constraint isn’t model capability. It’s information theory.

Consider what happens when you load 200k tokens of context. The model doesn’t process them equally. Attention mechanisms create what I call context gravity - information at certain positions (typically the beginning and end of context) gets weighted more heavily than information in the middle.

More context doesn’t mean better understanding. Past a certain point, it means worse understanding. The signal drowns in noise.

This is why researchers observe the “lost in the middle” phenomenon. Put critical information in the middle of a long context window and models miss it. Not because they’re dumb. Because attention is fundamentally a filtering mechanism, and filters have limits.

Smarter models with the same context architecture will have the same problem. They might be smarter about what they miss, but they’ll still miss things.

Progressive Disclosure as Architecture

Here’s the fix: don’t load everything. Load what’s needed, when it’s needed.

This principle has a name in interface design: progressive disclosure. Show users basic information first. Reveal complexity only when they ask for it. Don’t overwhelm with everything at once.

The same principle applies to AI context. I call the implementation Context Cascade.

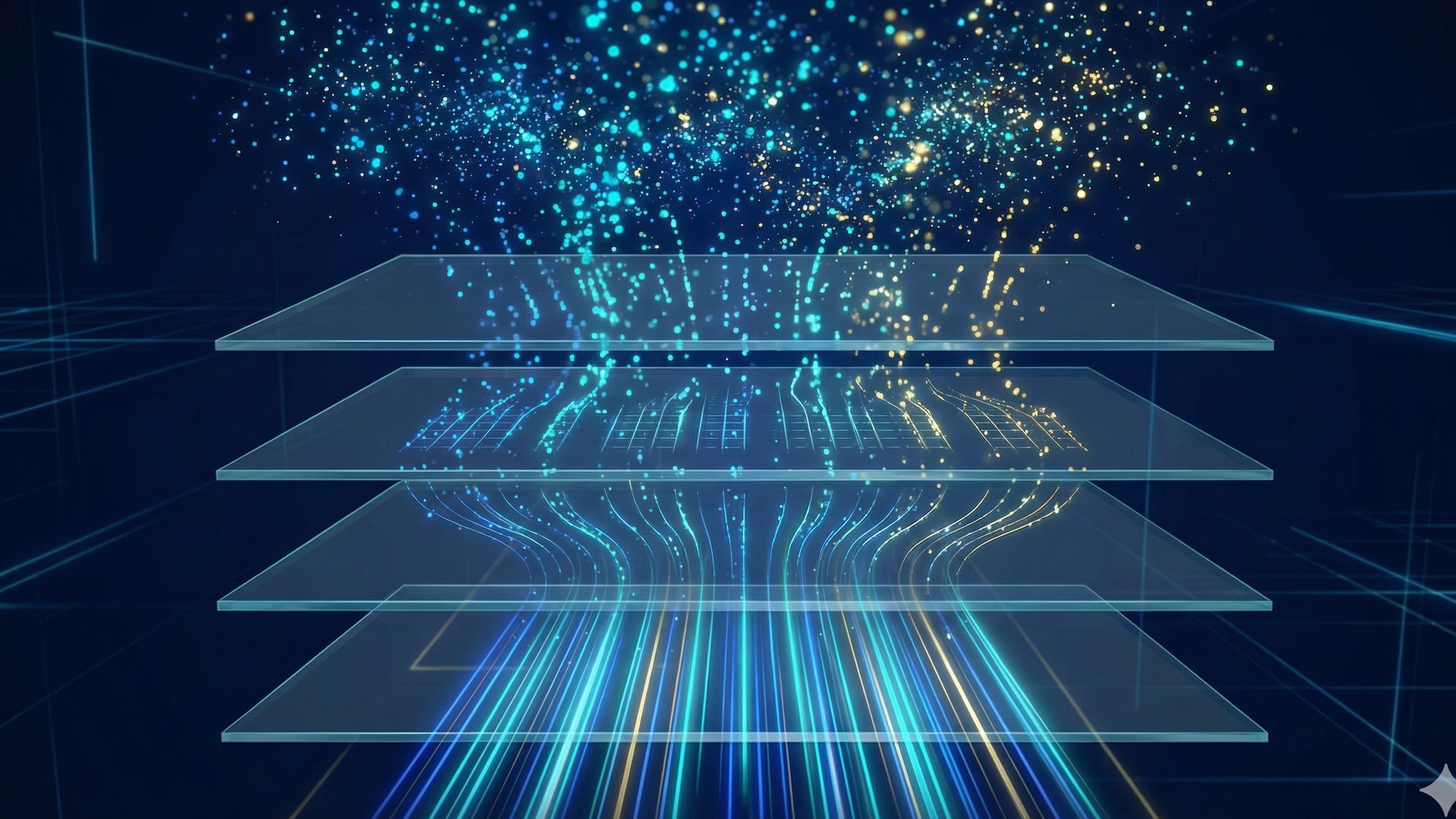

The architecture looks like this:

- Level 1: Playbooks (always loaded). ~2k tokens. High-level routing logic.

- Level 2: Skills (loaded on trigger). ~500-2k tokens each. Specific capabilities.

- Level 3: Agents (loaded by skills). ~1-5k tokens each. Specialized workers.

- Level 4: Commands (embedded in agents). ~200-500 tokens each. Atomic operations.

Only Level 1 loads initially. Everything else loads dynamically based on the task.

The result: 90%+ context savings compared to loading everything upfront.

But the real insight isn’t the token savings. It’s that the hierarchy encodes relevance.

When you ask for code review, the system loads code review skills, which load code review agents, which include code review commands. You never load deployment logic. You never load research capabilities. You never load documentation patterns.

The architecture knows what’s relevant because relevance is built into the structure.
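A minimal sketch of that loading rule, assuming a simple tree where each node carries a token cost and an optional set of task triggers. The `Component` class, the trigger names, and every token count here are hypothetical illustrations, not the actual Context Cascade code.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """A playbook, skill, agent, or command in the hierarchy."""
    name: str
    tokens: int
    # Task types that activate this node. Empty means "load whenever the parent loads".
    triggers: frozenset = frozenset()
    children: list = field(default_factory=list)


def total_tokens(node: Component) -> int:
    """Cost of the naive approach: load the whole tree upfront."""
    return node.tokens + sum(total_tokens(child) for child in node.children)


def load_for_task(root: Component, task: str) -> list:
    """Load the root, then descend only into branches the task triggers."""
    loaded = [root]
    for child in root.children:
        if not child.triggers or task in child.triggers:
            loaded.extend(load_for_task(child, task))
    return loaded


def loaded_tokens(root: Component, task: str) -> int:
    """Tokens actually placed in context for one task."""
    return sum(node.tokens for node in load_for_task(root, task))


# Hypothetical tree: one playbook, four skills, each with its own agents and commands.
PLAYBOOK = Component("playbook", 2_000, children=[
    Component("code-review", 1_500, frozenset({"code_review"}), [
        Component("reviewer", 3_000, children=[
            Component("lint", 300),
            Component("diff-summary", 400),
        ]),
    ]),
    Component("deployment", 1_800, frozenset({"deploy"}), [
        Component("release-manager", 4_000, children=[Component("rollback", 500)]),
    ]),
    Component("research", 1_200, frozenset({"research"}), [
        Component("literature-scout", 5_000),
    ]),
    Component("documentation", 1_000, frozenset({"docs"}), [
        Component("doc-writer", 2_500),
    ]),
])

print(loaded_tokens(PLAYBOOK, "code_review"), "of", total_tokens(PLAYBOOK))
```

In this toy tree a code review task loads 7,200 of 23,200 tokens. With only four skills the savings are modest; they grow with the number of dormant branches, because each added skill raises the upfront total without touching the per-task load.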

The Parallel to Human Cognition

This should sound familiar.

Humans don’t load all their knowledge into working memory for every task. Working memory is tiny - maybe 4-7 chunks. We compensate with expertise, which compresses patterns into retrievable units, and with external scaffolding - notes, documents, tools.

Remember psychological motion capture? The idea that expert thinking is more like movement than calculation? This is the context equivalent.

Experts don’t think about everything they know. They think about what’s relevant right now, with the rest of their knowledge available for retrieval if needed.

Progressive disclosure for AI implements the same pattern. Most knowledge stays dormant. Relevant knowledge loads on demand. The system mimics expert cognition not by being smarter, but by managing attention the same way experts do.

The Falsifiable Prediction

Now for the bet I mentioned at the start.

There are three paths the next generation of AI could take:

Path A: Larger context windows, same attention mechanisms. If we get 1M, 5M, 10M token context windows without fundamental changes to how attention works, the lost-in-the-middle problem gets worse, not better. More context means more noise. Progressive disclosure becomes more valuable because the filtering problem intensifies.

Path B: Same context windows, smarter attention. If models get better at weighting relevance across the full context, maybe the architecture becomes less necessary. But this is an extremely hard problem - it’s essentially asking the model to pre-filter its own inputs.

Path C: Massive context windows with perfect attention. This is the only scenario where my architecture becomes obsolete. If a future model can process 6 million tokens and attend to every piece equally well, then yes, dump everything in and let the model sort it out.

I’m betting we don’t get Path C anytime soon. The mechanics of attention work against it. In standard self-attention, computational cost scales quadratically with context length. Perfect attention at 6M tokens would require architectural breakthroughs we haven’t seen.
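To put a number on the quadratic claim, here is a back-of-the-envelope sketch. It assumes standard dense self-attention, where every token attends to every other token, and ignores sparse or linear attention variants that change the cost model.

```python
def relative_attention_cost(new_len: int, base_len: int) -> float:
    """Ratio of pairwise attention work under an O(n^2) cost model."""
    return (new_len / base_len) ** 2


# Going from a 200k window to a 6M window is 30x the tokens...
print(relative_attention_cost(6_000_000, 200_000))  # ...and 900.0x the attention work
```

Under that assumption, thirty times the context costs nine hundred times the attention compute.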

So I’m building for Path A - the most likely future where context remains constrained and managing attention remains essential.

What This Means for Your AI Work

If you’re building AI systems that need to work at scale, here’s the practical takeaway:

Don’t wait for models to solve your context problem. They won’t. At least not in the way you’re hoping.

Instead, build architecture that:

- Loads context hierarchically. Not everything at once. Levels of abstraction, with deeper levels loading on demand.

- Encodes relevance in structure. When skill X loads agent Y, that relationship is meaningful. Use structure to pre-filter what’s relevant.

- Evicts aggressively. Context that’s no longer relevant should leave. Don’t let the window fill with stale information.

- Caches strategically. If the same context gets requested repeatedly, keep it warm. If it’s one-time, let it go.
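The eviction and caching points can be sketched together as a token-budgeted store with least-recently-used eviction. This is one possible policy, not a description of any particular system; the `ContextWindow` class, the pinning flag, and the token figures are all assumptions for illustration.

```python
from collections import OrderedDict


class ContextWindow:
    """Token-budgeted context store with least-recently-used eviction."""

    def __init__(self, budget: int):
        self.budget = budget
        self._items = OrderedDict()  # name -> token cost, least recently used first
        self._pinned = set()         # names that must never be evicted

    @property
    def used(self) -> int:
        return sum(self._items.values())

    def names(self) -> list:
        return list(self._items)

    def load(self, name: str, tokens: int, pinned: bool = False) -> list:
        """Load or refresh an item; return the names evicted to make room."""
        if name in self._items:
            self._items.move_to_end(name)  # requested again: keep it warm
            return []
        pinned_total = sum(self._items[n] for n in self._pinned)
        if pinned_total + tokens > self.budget:
            raise ValueError(f"{name!r} cannot fit alongside pinned context")
        evicted = []
        while self.used + tokens > self.budget:
            # Oldest unpinned item goes first; the check above guarantees one exists.
            victim = next(n for n in self._items if n not in self._pinned)
            del self._items[victim]
            evicted.append(victim)
        self._items[name] = tokens
        if pinned:
            self._pinned.add(name)
        return evicted


window = ContextWindow(budget=10_000)
window.load("playbook", 2_000, pinned=True)   # routing logic stays resident
window.load("code-review-skill", 1_500)
window.load("reviewer-agent", 4_000)
window.load("code-review-skill", 1_500)       # reused, so it moves to the warm end
evicted = window.load("deploy-agent", 4_000)  # over budget: the stalest item leaves
print(evicted, window.used)
```

Here the reviewer agent is evicted rather than the skill, because the skill was requested more recently. A production system would likely want smarter signals than recency, but the shape is the same: a hard budget, a pinned core, and an explicit rule for what leaves.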

This is the same pattern every performant system uses. Databases have query optimizers. CDNs have edge caching. Operating systems have memory hierarchies. AI needs the equivalent.

The infrastructure paradox I wrote about applies here directly. Better models don’t help if your context management is broken. Worse, better models might make you less likely to fix your infrastructure because you assume the model will handle it.

It won’t.

The Attention Budget Mental Model

I want to leave you with a reframe.

Stop thinking about context windows as a technical limitation. Start thinking about them as an attention budget.

Every token you load is a cost. The question isn’t “will this fit?” The question is “is this worth the attention?”

When you frame it this way, the architecture decisions become obvious:

- High-level routing logic? Always worth loading. It directs everything else.

- Specific capabilities? Load when the task demands them.

- Deep specialization? Only when you’re actually doing that specialized work.

- Historical context? Compress and retrieve, don’t store raw.
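One way to turn “is this worth the attention?” into something computable is a greedy pick by relevance per token. This is a sketch under stated assumptions: the `relevance` scores are hypothetical estimates that would have to come from somewhere (a router, a retriever, a heuristic), and the greedy rule is a simple heuristic rather than an optimal allocation.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    tokens: int       # cost of loading this item (must be positive)
    relevance: float  # hypothetical 0..1 estimate of usefulness for the current task


def spend_budget(candidates: list, budget: int) -> list:
    """Pick items in order of relevance per token until the budget runs out."""
    chosen = []
    remaining = budget
    ranked = sorted(candidates, key=lambda c: c.relevance / c.tokens, reverse=True)
    for candidate in ranked:
        if candidate.tokens <= remaining:
            chosen.append(candidate.name)
            remaining -= candidate.tokens
    return chosen


CANDIDATES = [
    Candidate("routing playbook", 2_000, 1.0),
    Candidate("code review skill", 1_500, 0.9),
    Candidate("raw chat history", 40_000, 0.3),
    Candidate("compressed history summary", 1_000, 0.25),
    Candidate("deployment agent", 4_000, 0.05),
]

print(spend_budget(CANDIDATES, budget=6_000))
```

With a 6,000-token budget, the raw history never makes the cut while its compressed summary does, which is the “compress and retrieve” point from the list above.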

This mental model transfers to human teams too. Ground truth matters because attention without validation is wasted attention. Brief engineering matters because unclear briefs waste attention on the wrong problems.

The attention economy is the same for humans and AI. Finite resources. Competing demands. The winners are the ones who allocate attention where it matters.

Try It Yourself

The Context Cascade architecture is open source:

Context Cascade - 660+ components, hierarchical loading

Clone it. Look at how the hierarchy is structured. Notice how skills reference agents, how agents embed commands, how the whole thing loads progressively.

Then think about your own AI workflows. What are you loading that doesn’t need to be there? What could load on demand instead of upfront? Where is your context budget being wasted?

Fix those things. You’ll see immediate improvements. Not because your model got smarter - because your architecture stopped fighting against how attention actually works.

I’m building AI infrastructure for teams who can’t afford to wait for the mythical infinite-context model. If your AI workflows hit context limits before they hit capability limits, let’s talk.